Large language models (LLMs) have changed the way we interact with text and opened up a wide range of applications, whether you want to automate text generation, improve natural language understanding, or build interactive, intelligent user experiences.

The landscape is filled with a diverse range of powerful LLMs from various providers. OpenAI, Google, AI21, HuggingfaceHub, Aleph Alpha, Anthropic, and numerous open-source models offer different capabilities and strengths. However, integrating these models can be a time-consuming and challenging task due to their unique architectures, APIs, and compatibility requirements.

That’s where llm-client and LangChain come into play. These LLM integration tools provide a streamlined approach to incorporating different language models into your projects. They simplify the integration process, abstracting away the complexities and nuances associated with each individual LLM. With llm-client and LangChain, you can save valuable time and effort that would otherwise be spent on understanding and integrating multiple LLMs.

By using llm-client or LangChain, you gain the advantage of a unified interface that enables seamless integration with various LLMs. Rather than dealing with the intricacies of each model individually, you can leverage these tools to abstract the underlying complexities and focus on harnessing the power of language models for your specific application.

In the following sections, we will delve deeper into the features, advantages, and considerations of llm-client and LangChain, shedding light on how they simplify LLM integration and empower you to make the most informed decisions for your projects.

Disclaimer: I am the author of llm-client. While I have strived to present a balanced comparison between llm-client and LangChain, my perspectives may be influenced by my close association with the development of llm-client.

LLM-Client and LangChain

llm-client and LangChain act as intermediaries, bridging the gap between different LLMs and your project requirements. They provide a consistent API, allowing you to switch between LLMs without extensive code modifications or disruptions. This flexibility and compatibility make it easier to experiment with different models, compare their performance, and choose the most suitable one for your project.
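To make the idea of a consistent API concrete, here is a minimal sketch. Everything in it is hypothetical: `TextCompleter`, `FakeProviderA`, `FakeProviderB`, and `summarize` are illustration names, not part of llm-client or LangChain, and the "providers" just echo the prompt instead of calling a real model.

```python
from typing import Protocol


class TextCompleter(Protocol):
    """Hypothetical shared interface; not the API of either library."""

    def complete(self, prompt: str) -> str: ...


class FakeProviderA:
    """Stand-in for one provider's client (no network calls)."""

    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"


class FakeProviderB:
    """Stand-in for a second provider with a different backend."""

    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"


def summarize(llm: TextCompleter, text: str) -> str:
    # Application code depends only on the shared interface,
    # so the backend can be swapped without touching this function.
    return llm.complete(f"Summarize: {text}")


if __name__ == "__main__":
    for backend in (FakeProviderA(), FakeProviderB()):
        print(summarize(backend, "LLM integration tools"))
```

Because `summarize` only relies on `complete`, trying a different model comes down to passing in a different object.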

LangChain, a well-established project in this space, offers a wide range of features: a generic interface to LLMs (the subject of this post), a framework for managing prompts, a central interface to long-term memory, indexes, chains of LLMs, and agents for tasks an LLM cannot handle on its own (e.g., calculations or search). It also has a large community (over 50K stars on GitHub as of the writing of this post).

You can find out more about LangChain in this blog post

LLM-Client, on the other hand, is engineered specifically for LLM integration. It focuses on a user-friendly interface and on removing integration complexity, so that developers can work with different models through one consistent client.

Unpacking Their Advantages

LangChain: Community Support and Simple Non-Async Usage

LangChain’s expansive community serves as a significant advantage. Additionally, LangChain excels with its straightforward support for non-async usage.

To generate text with LangChain, you can use the following code:

pip install langchain[llms]

import os

from langchain.llms import OpenAI # Or any other model available on LangChain

os.environ["OPENAI_API_KEY"] = "..." # insert your API key here (must be a string)

llm = OpenAI(model_name="text-ada-001", n=2, best_of=2) # Here you can pass additional params, e.g. temperature, max_tokens, etc.

llm("Tell me a joke")

For async text generation (only available for some of the models), the following code can be used:

await llm.agenerate(["Hello, how are you?"])
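A bare `await` like the one above only works inside a coroutine or an async-aware REPL or notebook. The sketch below shows one way to drive such calls from a regular script and to run two requests concurrently. Note that `fake_agenerate` is a hypothetical stand-in that echoes its input; it is not LangChain's `agenerate` and makes no network calls.

```python
import asyncio


async def fake_agenerate(prompts: list[str]) -> list[str]:
    """Hypothetical stand-in for an async LLM call; no network involved."""
    await asyncio.sleep(0)  # yield control, as a real request would while waiting
    return [f"echo: {p}" for p in prompts]


async def main() -> list[list[str]]:
    # Both requests are scheduled together; gather returns results
    # in the same order as its arguments.
    return await asyncio.gather(
        fake_agenerate(["Hello, how are you?"]),
        fake_agenerate(["Tell me a joke"]),
    )


if __name__ == "__main__":
    print(asyncio.run(main()))
```

With a real client, the same pattern lets several slow requests overlap instead of running one after another.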

LangChain also supports generating embeddings:

from langchain.embeddings.openai import OpenAIEmbeddings # Or any other model available on LangChain

embeddings = OpenAIEmbeddings()

text = "This is a test document." # Example input to embed

query_result = embeddings.embed_query(text)

doc_result = embeddings.embed_documents([text])
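An embedding is a list of floats, and a common next step is to compare a query vector against document vectors. The following is a minimal sketch of cosine similarity in plain Python. The three-dimensional vectors are made up for illustration (real embeddings have far more dimensions), and `cosine_similarity` is a helper defined here, not a LangChain function.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional vectors standing in for real embeddings.
query_vec = [1.0, 0.0, 0.0]
doc_vecs = {"doc_a": [2.0, 0.0, 0.0], "doc_b": [0.0, 1.0, 0.0]}

# Pick the document whose vector points in the direction closest to the query.
best = max(doc_vecs, key=lambda name: cosine_similarity(query_vec, doc_vecs[name]))
print(best)
```

In practice you would replace the made-up vectors with the outputs of the embedding calls and, for larger collections, likely use a vector store rather than a linear scan.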

LLM-Client: Performance, Flexibility

In contrast, llm-client offers a convenient wrapper with standardized parameters, so developers can avoid complicated setups and inconsistent configurations. It is designed specifically for LLM integration and leaves out unrelated features and dependencies.

Furthermore, llm-client gives developers greater control over the ClientSession, paving the way for future asynchronous capabilities. Here’s how to perform text completion asynchronously with llm-client:

pip install llm-client[api]

import asyncio

import os

from aiohttp import ClientSession

from llm_client import OpenAIClient, LLMAPIClientConfig # Or any other model available on llm-client

async def main():

    async with ClientSession() as session:

        llm_client = OpenAIClient(LLMAPIClientConfig(os.environ["API_KEY"], session, default_model="text-ada-001"))

        text = "This is indeed a test"

        print("generated text:", await llm_client.text_completion(text, n=2, best_of=2)) # Here you can pass additional params, e.g. temperature, max_tokens, etc.

asyncio.run(main()) # "async with" and "await" must run inside a coroutine

LLM-Client also supports embeddings:

await llm_client.embedding(text)

You can do the above without async:

import os

from llm_client import init_sync_llm_api_client, LLMAPIClientType

llm_client = init_sync_llm_api_client(LLMAPIClientType.OPEN_AI, api_key=os.environ["API_KEY"], default_model="text-ada-001")

text = "This is indeed a test"

llm_client.text_completion(text, n=2, best_of=2)

llm_client.embedding(text)
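For intuition about how a synchronous entry point can sit on top of an async client, here is a minimal sketch. It is an assumption-laden illustration, not a description of how `init_sync_llm_api_client` works internally: `FakeAsyncClient` and `SyncFacade` are hypothetical names, and the fake client only appends an exclamation mark to the prompt.

```python
import asyncio


class FakeAsyncClient:
    """Hypothetical async client; stands in for a real LLM client."""

    async def text_completion(self, prompt: str) -> list[str]:
        await asyncio.sleep(0)  # placeholder for waiting on a network response
        return [f"{prompt}!"]


class SyncFacade:
    """Blocking wrapper that runs each coroutine to completion."""

    def __init__(self, async_client: FakeAsyncClient) -> None:
        self._client = async_client

    def text_completion(self, prompt: str) -> list[str]:
        # asyncio.run creates an event loop, runs the coroutine, then closes the loop.
        return asyncio.run(self._client.text_completion(prompt))


if __name__ == "__main__":
    client = SyncFacade(FakeAsyncClient())
    print(client.text_completion("This is indeed a test"))
```

One limitation of this approach: `asyncio.run` raises an error when called from code that is already running inside an event loop, so a wrapper like this suits plain scripts rather than async applications.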

Feature Comparison

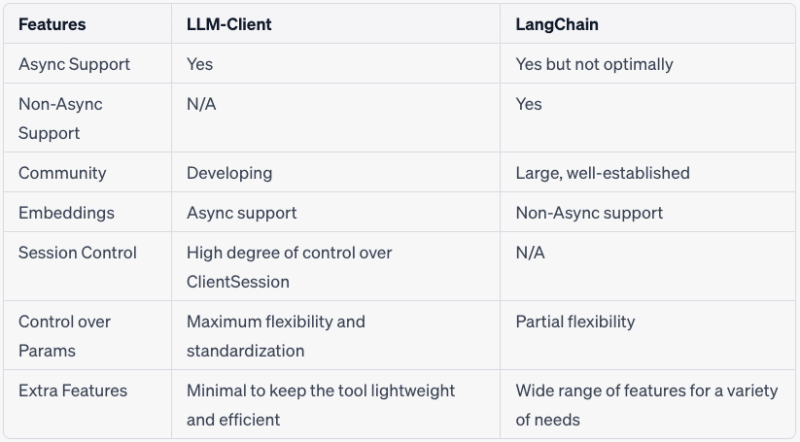

To provide a more clear comparison, let’s look at a side-by-side feature comparison of both tools:

Summary

While both LangChain and llm-client have their own strengths, your choice should be based on your specific requirements and comfort level. LangChain’s substantial community and straightforward non-async usage may suit developers looking for a collaborative environment and simpler synchronous operations. Conversely, llm-client’s performance, flexibility, and purposeful design for LLM integration make it a good choice for those seeking maximum control and efficient, streamlined workflows.

By understanding your needs and examining the capabilities of these tools, you’ll be better equipped to make an informed decision. Remember, the best tool is the one that most effectively serves your purpose. Happy coding!
