I have a Lambda function that takes a few seconds to execute, and the delay is not related to a cold start. The time is spent in the handler code itself, because it is CPU- and memory-intensive.

Performance problems related to cold starts can be mitigated with provisioned concurrency and by managing the "Init" code. You can read about this in my previous articles:

- How to speed up your Lambda function

- Effortlessly Juggling Multiple Concurrent Requests in AWS Lambda

We know the performance problem is related to CPU and memory, so the solution might be as simple as increasing the memory, right?

Let's do a small demonstration comparing the execution of the Lambda with different memory configurations. It's worth noting that we cannot explicitly control the Lambda CPU; it's automatically increased as we increase the memory. From the AWS docs:

The amount of memory also determines the amount of virtual CPU available to a function. Adding more memory proportionally increases the amount of CPU, increasing the overall computational power available. If a function is CPU-, network- or memory-bound, then changing the memory setting can dramatically improve its performance.

Testing the performance of a Lambda function

For this demo let's use the following Lambda function that I generated with the help of ChatGPT:

export const handler = async (event) => {
  const matrixSize = 100; // Size of the square matrix
  const matrix = generateMatrix(matrixSize);
  const result = performMatrixMultiplication(matrix);
  return result;
};

function generateMatrix(size) {
  const matrix = [];
  for (let i = 0; i < size; i++) {
    const row = [];
    for (let j = 0; j < size; j++) {
      row.push(Math.random());
    }
    matrix.push(row);
  }
  return matrix;
}

function performMatrixMultiplication(matrix) {
  const size = matrix.length;
  const result = new Array(size);
  for (let i = 0; i < size; i++) {
    result[i] = new Array(size);
    for (let j = 0; j < size; j++) {
      let sum = 0;
      for (let k = 0; k < size; k++) {
        sum += matrix[i][k] * matrix[k][j];
      }
      result[i][j] = sum;
    }
  }
  return result;
}

The Lambda function generates a large square matrix, performs matrix multiplication, and returns the resulting matrix. That's an example of a CPU and memory-intensive function.
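Before deploying, a rough local timing can give a feel for how CPU-bound this kind of work is. The sketch below is not part of the original function: `measureAverageMs` and `multiplySquare` are hypothetical helper names, and timings on a local machine will not match what Lambda reports. It only illustrates how the duration grows with the matrix size.

```javascript
// Hypothetical helper (not from the article): runs a synchronous function
// several times and returns the average wall-clock duration in milliseconds.
function measureAverageMs(fn, runs = 5) {
  let totalMs = 0;
  for (let i = 0; i < runs; i++) {
    const start = process.hrtime.bigint(); // nanoseconds, as a BigInt
    fn();
    totalMs += Number(process.hrtime.bigint() - start) / 1e6;
  }
  return totalMs / runs;
}

// Stand-in workload: squares a size x size matrix of random numbers,
// mirroring the triple loop in performMatrixMultiplication.
function multiplySquare(size) {
  const m = Array.from({ length: size }, () =>
    Array.from({ length: size }, () => Math.random())
  );
  const result = Array.from({ length: size }, () => new Array(size).fill(0));
  for (let i = 0; i < size; i++) {
    for (let j = 0; j < size; j++) {
      let sum = 0;
      for (let k = 0; k < size; k++) sum += m[i][k] * m[k][j];
      result[i][j] = sum;
    }
  }
  return result;
}

for (const size of [50, 100, 200]) {
  const avg = measureAverageMs(() => multiplySquare(size));
  console.log(`size=${size} avg=${avg.toFixed(2)} ms`);
}
```

Because the work is a triple nested loop, doubling the matrix size multiplies the number of inner-loop steps by eight, which is why the duration is so sensitive to the available CPU.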

Lambda with 128 MB memory

- Create this function manually in the AWS console, selecting Node.js as the runtime, and keep the rest of the configuration at the defaults. This will create the Lambda with 128 MB of memory.

- Execute the function by navigating to the "Test" tab and clicking the "Test" button.

Notice the results:

Duration: 298.55 ms Billed Duration: 299 ms Memory Size: 128 MB Max Memory Used: 72 MB Init Duration: 160.36 ms

We got a duration of 298.55 ms.
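When comparing several runs, it can help to pull these numbers out programmatically. The following is a minimal sketch, assuming the report text has the `Label: value unit` shape shown above; `parseReport` is a hypothetical helper name, not an AWS API.

```javascript
// Hypothetical helper: extracts "<Label>: <number> <unit>" pairs from a
// Lambda report line, e.g. "Billed Duration: 299 ms" or "Memory Size: 128 MB".
function parseReport(line) {
  const fields = {};
  const pattern = /([A-Z][A-Za-z ]*?):\s*([\d.]+)\s*(ms|MB)/g;
  for (const [, label, value, unit] of line.matchAll(pattern)) {
    fields[label.trim()] = { value: Number(value), unit };
  }
  return fields;
}

const report =
  "Duration: 298.55 ms Billed Duration: 299 ms Memory Size: 128 MB " +
  "Max Memory Used: 72 MB Init Duration: 160.36 ms";
const parsed = parseReport(report);
console.log(parsed["Billed Duration"].value); // 299
console.log(parsed["Max Memory Used"].value); // 72
```

The billed duration and the maximum memory used are the two values that matter most for the cost discussion later in this article.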

Lambda with 256 MB memory

- In the configuration tab, update the Lambda memory to 256 MB

- Test the Lambda function

Notice the results:

Duration: 133.94 ms Billed Duration: 134 ms Memory Size: 256 MB Max Memory Used: 73 MB Init Duration: 162.88 ms

We got a duration of 133.94 ms by doubling the memory.

Lambda with 512 MB memory

- In the configuration tab, update the Lambda memory to 512 MB

- Test the Lambda function

Notice the results:

Duration: 51.12 ms Billed Duration: 52 ms Memory Size: 512 MB Max Memory Used: 73 MB Init Duration: 144.95 ms

We got a duration of 51.12 ms by doubling the memory.

Lambda with 1024 MB memory

- In the configuration tab, update the Lambda memory to 1024 MB

- Test the Lambda function

Notice the results:

Duration: 30.42 ms Billed Duration: 31 ms Memory Size: 1024 MB Max Memory Used: 73 MB Init Duration: 163.51 ms

We got a duration of 30.42 ms by doubling the memory once more.

Shall we continue to increase the memory?

We have demonstrated that more memory means faster execution.

At some point, the increased memory and the additional CPU that comes with it will stop improving performance. But for now, let's accept that a 30.42 ms execution time is reasonable. It is worth noting that the memory used in all executions stayed at 72 to 73 MB, so it was the extra CPU, not the extra memory, that improved the duration.
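To see how the gain changes from one step to the next, the four measured durations can be compared pairwise. This is only a sketch over the single runs reported above (each of which includes whatever noise a one-off test carries); `stepSpeedups` is a hypothetical helper name.

```javascript
// Durations (ms) measured in the four test runs above.
const runs = [
  { memoryMb: 128, durationMs: 298.55 },
  { memoryMb: 256, durationMs: 133.94 },
  { memoryMb: 512, durationMs: 51.12 },
  { memoryMb: 1024, durationMs: 30.42 },
];

// Speedup of each run relative to the previous (half-size) configuration.
function stepSpeedups(measurements) {
  return measurements.slice(1).map((run, i) => ({
    from: measurements[i].memoryMb,
    to: run.memoryMb,
    speedup: measurements[i].durationMs / run.durationMs,
  }));
}

for (const { from, to, speedup } of stepSpeedups(runs)) {
  console.log(`${from} MB -> ${to} MB: ${speedup.toFixed(2)}x faster`);
}
// 128 MB -> 256 MB: 2.23x faster
// 256 MB -> 512 MB: 2.62x faster
// 512 MB -> 1024 MB: 1.68x faster
```

The first two doublings each cut the duration by more than half, while the last one gives a smaller gain, which is an early hint of the diminishing returns mentioned above.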

How to choose a memory config?

As with any software configuration, there are trade-offs. In this case, it's the cost of execution.

Lambda is priced based on the number of requests and the duration of each request. In addition, the price of the duration depends on the memory allocated to the Lambda function. From the AWS docs:

| Memory (MB) | Price per 1 ms |
|---|---|
| 128 | $0.0000000021 |
| 512 | $0.0000000083 |
| 1024 | $0.0000000167 |
| 1536 | $0.0000000250 |
| 2048 | $0.0000000333 |
| 3072 | $0.0000000500 |
| 4096 | $0.0000000667 |
| 5120 | $0.0000000833 |
| 6144 | $0.0000001000 |
| 7168 | $0.0000001167 |
| 8192 | $0.0000001333 |
| 9216 | $0.0000001500 |
| 10240 | $0.0000001667 |
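Combining these prices with the billed durations from the test runs earlier gives a rough duration cost per invocation. This is a sketch under stated assumptions: the 256 MB price does not appear in the list above and is assumed here to be twice the 128 MB price, the per-request charge is ignored because it does not depend on memory, and each duration comes from a single test run.

```javascript
// Price per 1 ms (USD), copied from the price list above. The 256 MB price
// is not listed there; it is an ASSUMPTION, taken as 2x the 128 MB price.
const pricePerMs = {
  128: 0.0000000021,
  256: 0.0000000042, // assumed, not from the price list
  512: 0.0000000083,
  1024: 0.0000000167,
};

// Billed durations (ms) from the four test runs earlier in the article.
const billedMs = { 128: 299, 256: 134, 512: 52, 1024: 31 };

// Duration cost of one invocation = billed milliseconds x price per ms.
function costPerInvocation(memoryMb) {
  return billedMs[memoryMb] * pricePerMs[memoryMb];
}

const costs = Object.keys(billedMs)
  .map(Number)
  .map((memoryMb) => ({ memoryMb, cost: costPerInvocation(memoryMb) }));

const cheapest = costs.reduce((a, b) => (b.cost < a.cost ? b : a));

for (const { memoryMb, cost } of costs) {
  console.log(`${memoryMb} MB: $${cost.toExponential(3)} per invocation`);
}
console.log(`Cheapest in this sample: ${cheapest.memoryMb} MB`);
```

In this small sample the cost falls as memory increases up to 512 MB and then rises again at 1024 MB, because the duration no longer shrinks as fast as the price per millisecond grows.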

So how can we find a good balance between memory and cost?

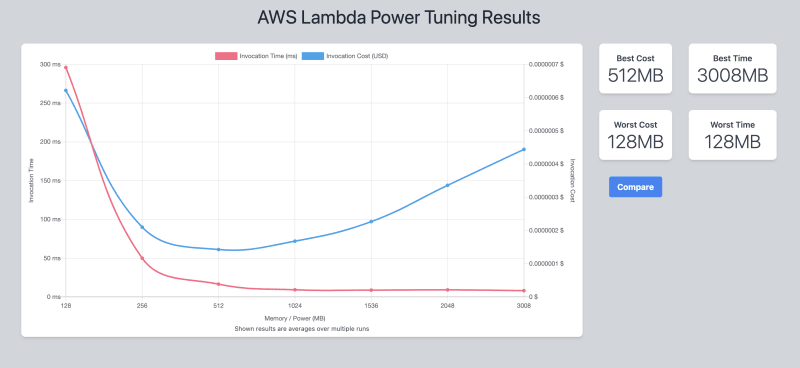

The AWS Lambda Power Tuning tool helps optimise Lambda performance and cost in a data-driven manner. Let's try it out:

- There are multiple ways to deploy the application into the AWS account. We'll deploy it from the AWS Serverless Application Repository as that's the simplest option.

- The deployment of this serverless application will create a step function whose name starts with powerTuningStateMachine.

- Start the execution of the step function and pass the following JSON:

{
  "lambdaARN": "your-lambda-function-arn",
  "powerValues": [128, 256, 512, 1024, 1536, 2048, 3008],
  "num": 50,
  "payload": {},
  "parallelInvocation": true,
  "strategy": "cost"
}

- Once the execution completes, it will generate the following output in the "Execution input and output" tab of the step function:

{
  "power": 512,
  "cost": 1.428e-7,
  "duration": 16.566666666666666,
  "stateMachine": {
    "executionCost": 0.00033,
    "lambdaCost": 0.00011801685000000001,
    "visualization": "https://lambda-power-tuning.show/XXXX"
  }
}

The visualisation looks as follows:

This graph shows that a memory setting of 512 MB would offer a good balance of cost and performance.
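The state machine execution described above can also be started from code instead of the console. The following is a minimal sketch, assuming the `@aws-sdk/client-sfn` package is installed and AWS credentials and a region are configured in the environment. `buildPowerTuningInput` and `startPowerTuning` are hypothetical helper names, and the ARNs in the usage note are placeholders.

```javascript
// Builds the input document for the power-tuning state machine.
// Field names and default values mirror the JSON shown earlier.
function buildPowerTuningInput(lambdaARN, overrides = {}) {
  return {
    lambdaARN,
    powerValues: [128, 256, 512, 1024, 1536, 2048, 3008],
    num: 50,
    payload: {},
    parallelInvocation: true,
    strategy: "cost",
    ...overrides, // e.g. { num: 20 } for a shorter tuning run
  };
}

// Starts one execution of the state machine and returns its ARN.
// The SDK is loaded lazily so the builder above works without it.
async function startPowerTuning(stateMachineArn, input) {
  const { SFNClient, StartExecutionCommand } = await import(
    "@aws-sdk/client-sfn"
  );
  const client = new SFNClient({});
  const { executionArn } = await client.send(
    new StartExecutionCommand({
      stateMachineArn,
      input: JSON.stringify(input), // Step Functions expects a JSON string
    })
  );
  return executionArn;
}
```

A call would look like `startPowerTuning("your-state-machine-arn", buildPowerTuningInput("your-lambda-function-arn"))`, with both ARNs replaced by the real values from your account.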

Thanks for reading this far. Did you like this article, do you have feedback, or would you like to discuss the topic further? Please reach out on Twitter or LinkedIn.
