Pipeless is an easy-to-use, open-source multimedia framework with a focus on AI.

It lets developers build and deploy apps that manipulate audio and video in just minutes, because they can concentrate on the code instead of building and maintaining processing pipelines.

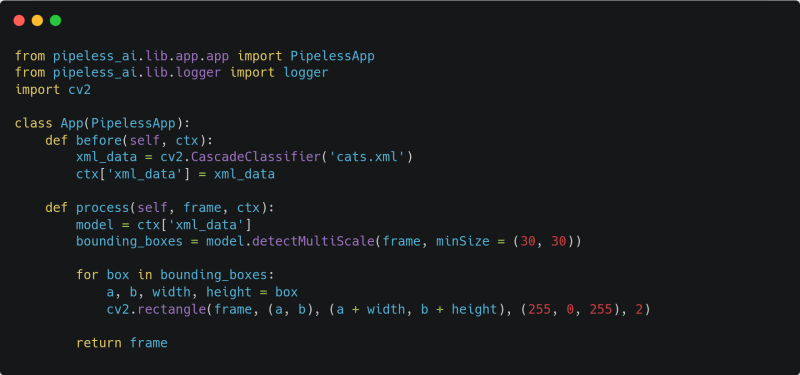

For example, with just a few simple lines of code you can build an application that recognizes cats in any video.

The rest of this article explains in detail what Pipeless is and how to use it. Prefer playing with the code over reading? Here is the repo with ready-to-run examples, including the `examples/cats` directory.

The Problem

AI/ML has taken the programming world by storm in the past few years. The number of public, freely available Computer Vision (CV) models is growing really fast. Training on custom datasets gets easier every day: with transfer learning techniques and libraries like Keras or PyTorch, anyone can train a model within hours.

However, actually processing multimedia is still really hard. For example, all these CV models take RGB/BGR arrays as image input. Converting a single picture into an RGB array is straightforward, but with video things get complicated really fast. It is already fairly complex when developing locally, and it becomes even harder when you deploy a service that, for example, waits for an incoming stream and processes it in real time to produce some data or to modify the stream itself. Not to mention handling different input media formats, streams encoded with different codecs, and so on.
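To make the RGB/BGR point concrete: OpenCV, for instance, decodes images with the channels in BGR order, while many models expect RGB. The minimal sketch below assumes a frame stored as nested lists of `(b, g, r)` pixel tuples rather than the NumPy arrays real code would use, and shows that the conversion is just a per-pixel channel swap. The `bgr_to_rgb` name is hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]


def bgr_to_rgb(frame: Frame) -> Frame:
    """Return a new frame with the first and third channel of every pixel swapped."""
    return [[(p[2], p[1], p[0]) for p in row] for row in frame]


if __name__ == "__main__":
    # A 1x2 frame in BGR order: one pure-blue pixel, then one pure-red pixel.
    bgr = [[(255, 0, 0), (0, 0, 255)]]
    print(bgr_to_rgb(bgr))  # [[(0, 0, 255), (255, 0, 0)]]
```

With NumPy arrays the same swap is usually written as `frame[..., ::-1]` or `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`.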

How Pipeless works

Let's use the computer vision example to explain the pain outlined in the previous section in more detail. Imagine you are building an app that detects cats in a video (this is one of the ready-to-run examples). You will need to:

- Demux the video: open the container (for example, MP4) to extract the individual streams (video, audio, subtitles, etc.).

- Decode each stream: convert the encoded stream into a raw stream. In the case of an MP4 video stream, this typically means taking the H.264-encoded frames and converting them into RGB frames.

- Process the video: you can now pass the RGB image to your computer vision model, for example to obtain bounding boxes, and then modify the frame by drawing a square around the cat's face.

- Encode the stream: exactly the opposite of the decoding step. You have to pass the frames through an H.264 encoder.

- Mux the new video: take your H.264-encoded video stream and the original audio stream and put them into an MP4 container, including presentation timestamps so the streams stay in sync.
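The five steps can be modeled as a chain of functions. This is a toy sketch, not how Pipeless or a real media stack works internally: the "container" is a Python dict, `zlib` stands in for the H.264 codec, and a frame is a `bytes` object. What it does show is the shape of the pipeline (demux, decode, process, encode, mux), with each packet's timestamp carried through so the streams can be re-synced.

```python
import zlib
from typing import Dict, List, Tuple

# A packet is (presentation timestamp in ms, payload bytes).
Packet = Tuple[int, bytes]
# Toy container: stream name -> list of packets. Real containers (MP4, MKV) interleave them.
Container = Dict[str, List[Packet]]


def demux(container: Container) -> Tuple[List[Packet], List[Packet]]:
    """Split the container into its video and audio streams."""
    return container["video"], container.get("audio", [])


def decode(packets: List[Packet]) -> List[Packet]:
    """Turn encoded packets into raw frames (zlib stands in for an H.264 decoder)."""
    return [(pts, zlib.decompress(data)) for pts, data in packets]


def process(frames: List[Packet]) -> List[Packet]:
    """Application logic; here it just upper-cases the raw frame bytes."""
    return [(pts, raw.upper()) for pts, raw in frames]


def encode(frames: List[Packet]) -> List[Packet]:
    """Re-encode raw frames (zlib stands in for an H.264 encoder)."""
    return [(pts, zlib.compress(raw)) for pts, raw in frames]


def mux(video: List[Packet], audio: List[Packet]) -> Container:
    """Put the streams back into a container, keeping each packet's timestamp."""
    return {"video": video, "audio": audio}


def run_pipeline(container: Container) -> Container:
    video, audio = demux(container)
    # Audio is passed through untouched: it is neither decoded nor re-encoded here.
    return mux(encode(process(decode(video))), audio)


if __name__ == "__main__":
    source = {
        "video": [(0, zlib.compress(b"frame-a")), (40, zlib.compress(b"frame-b"))],
        "audio": [(0, b"aac-0"), (20, b"aac-1")],
    }
    result = run_pipeline(source)
    print([(pts, zlib.decompress(d)) for pts, d in result["video"]])
    # [(0, b'FRAME-A'), (40, b'FRAME-B')]
```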

The steps outlined above are the most basic pipeline you can build to work with video locally. Some tools, like OpenCV, already make it easy to iterate over the frames of a video in a for loop. That's great for learning and experimenting, but not suitable for real apps running in production.

At some point, you will need to deploy your app. Let's imagine you have a garden and a camera with a motion sensor, and you want to know where your cat is at any moment. The camera sends video when it detects motion. You will need to extend the basic pipeline above so it can recognize when there is a new stream to process. You also need to deploy your cat recognition model, maybe deploy an RTSP server that receives the video stream and passes it to the processing pipeline, and finally send yourself the edited video.

What started as simply using a public cat recognition model ends up being really hard, because you have to set up ‘everything else’ around it.

Here is where Pipeless comes in. It takes care of all the grunt work automatically. With Pipeless, all you need to do in the case above is take an RGB image, pass it through the model, draw the square, and voilà. You just saved some weeks of your life! This is exactly what the example at the beginning of the article shows.
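To illustrate how little is left for the developer, here is a minimal sketch of the per-frame logic only. It does not use Pipeless's real API: `detect_cats` is a hypothetical stand-in for a real CV model (it returns a fixed bounding box), `draw_box` and `process_frame` are hypothetical helpers, and the frame is a list of rows of RGB tuples rather than an array.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive


def detect_cats(frame: Frame) -> List[Box]:
    """Hypothetical model stub: a real app would run a CV model here."""
    height, width = len(frame), len(frame[0])
    return [(1, 1, width - 2, height - 2)]


def draw_box(frame: Frame, box: Box, color: Pixel = (0, 255, 0)) -> None:
    """Draw the outline of `box` onto `frame` in place."""
    x0, y0, x1, y1 = box
    for x in range(x0, x1 + 1):  # top and bottom edges
        frame[y0][x] = color
        frame[y1][x] = color
    for y in range(y0, y1 + 1):  # left and right edges
        frame[y][x0] = color
        frame[y][x1] = color


def process_frame(frame: Frame) -> Frame:
    """Take an RGB frame, run the model, and draw a square around each detection."""
    for box in detect_cats(frame):
        draw_box(frame, box)
    return frame


if __name__ == "__main__":
    black = (0, 0, 0)
    frame = [[black] * 5 for _ in range(5)]
    for row in process_frame(frame):
        print("".join("#" if px != black else "." for px in row))
```

Everything outside `process_frame` (receiving the stream, decoding, re-encoding, and delivering the output) is the part Pipeless is meant to handle for you.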

Pipeless Architecture

Pipeless is designed to be easy to deploy in the cloud while also being able to run locally with a single command. It has three main independent components, which communicate with each other via messages using the lightweight nanomsg-next-generation library (NNG):

Input: takes care of reading or receiving the streams, demuxing and decoding them into individual frames. It sends the raw frames to the workers for processing following a round-robin schedule.

Worker: takes care of executing the user’s applications (your applications). You can deploy any number of workers to process frames in parallel, which helps increase the frame rate when processing streams in real time and distributes the “heavy” load across different computers.

Output: takes care of receiving the processed raw frames, encoding them, and muxing them into the output container format, for example MP4. It allows you to send the video to an external URI, play it directly on the device screen, or store it in a file.
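The Input-to-Worker distribution described above can be sketched with in-process lists standing in for the NNG sockets that carry frames between components. This is an illustration under assumptions, not Pipeless's code: `dispatch_round_robin`, `run_workers`, and `reassemble` are hypothetical names, and the reordering step assumes each frame carries a sequence number, since frames handled by different workers can finish out of order.

```python
from itertools import cycle
from typing import Callable, Dict, List, Tuple

Frame = Tuple[int, str]  # (sequence number, payload)


def dispatch_round_robin(frames: List[Frame], n_workers: int) -> Dict[int, List[Frame]]:
    """Assign frame 0 to worker 0, frame 1 to worker 1, ..., wrapping around."""
    queues: Dict[int, List[Frame]] = {w: [] for w in range(n_workers)}
    for worker, frame in zip(cycle(range(n_workers)), frames):
        queues[worker].append(frame)
    return queues


def run_workers(queues: Dict[int, List[Frame]], app: Callable[[str], str]) -> List[Frame]:
    """Each worker applies the user's app to its own frames."""
    results: List[Frame] = []
    # Visit workers in reverse to simulate them finishing in a different order.
    for worker in sorted(queues, reverse=True):
        results.extend((seq, app(payload)) for seq, payload in queues[worker])
    return results


def reassemble(results: List[Frame]) -> List[Frame]:
    """Output side: restore the original frame order before encoding."""
    return sorted(results, key=lambda f: f[0])


if __name__ == "__main__":
    frames = [(i, f"f{i}") for i in range(5)]
    queues = dispatch_round_robin(frames, n_workers=2)
    print(queues)  # {0: [(0, 'f0'), (2, 'f2'), (4, 'f4')], 1: [(1, 'f1'), (3, 'f3')]}
    print(reassemble(run_workers(queues, str.upper)))
```

The reason to add workers is throughput: if an app needs roughly 100 ms per frame, one worker tops out near 10 frames per second, while three workers fed round-robin can approach 30, ignoring messaging overhead.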

In addition, Pipeless has few dependencies: you just need GStreamer and Python installed on your system. Find the detailed list of requirements and versions here.

Roadmap

Right now Pipeless supports a limited set of functionality, but we are actively working to add more every day.

You can learn more about the currently supported input and output formats in the Current State section of the README. Do you need a new one? Create a pull request or ask for it by creating a GitHub issue.

The current version is the very first one and doesn’t support automatically deploying apps to production. However, this is one of the main features we will bring very soon. So, stay tuned!

miguelaeh/pipeless

A framework to build and deploy multimodal perception apps in minutes without worrying about multimedia pipelines

Pipeless


Index 📚

- Requirements

- Installation

- Getting started

- Current State

- Troubleshooting

- Examples

- Contributing

- License

Requirements ☝️

- Python (tested with version 3.10.12)

- GStreamer 1.20.3. Verify with `gst-launch-1.0 --gst-version`. Installation instructions here
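A small script can check both requirements before installing. This is a hypothetical helper, not part of Pipeless: it compares the running Python against 3.10 (the README only says 3.10.12 was tested, so treating 3.10 as the minimum is an assumption) and, if `gst-launch-1.0` is on the `PATH`, runs it with `--gst-version` and pulls the first `X.Y.Z` number out of the output.

```python
import re
import shutil
import subprocess
import sys
from typing import Optional, Tuple

MIN_PYTHON = (3, 10)  # assumption: the README only states "tested with 3.10.12"


def parse_gst_version(text: str) -> Optional[Tuple[int, ...]]:
    """Extract the first 'X.Y.Z' version number from gst-launch-1.0 output."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    return tuple(int(part) for part in match.groups()) if match else None


def gstreamer_version() -> Optional[Tuple[int, ...]]:
    """Return the installed GStreamer version, or None if gst-launch-1.0 is missing."""
    binary = shutil.which("gst-launch-1.0")
    if binary is None:
        return None
    out = subprocess.run([binary, "--gst-version"], capture_output=True, text=True, check=False)
    return parse_gst_version(out.stdout)


if __name__ == "__main__":
    py_ok = sys.version_info[:2] >= MIN_PYTHON
    print(f"Python {sys.version.split()[0]}: {'ok' if py_ok else 'too old'}")
    gst = gstreamer_version()
    print("GStreamer:", ".".join(map(str, gst)) if gst else "not found")
```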

Note about macOS

The latest version of macOS (Ventura) comes with Python 3.9 by default. You can install version 3.10 with:

brew install python

Also, to install GStreamer on macOS, use the following instead of the upstream instructions to ensure all the required packages are installed:

brew…

Top comments

Interesting... Can Pipeless capture frames from a webcam?

Not right now, but it is something we can add really easily. If that's valuable, I will be happy to add it. Here you can find all the currently supported input and output formats.

It already supports showing the processed video directly on the screen for testing purposes, so it may make sense to provide an option to read from the webcam too.

It would be useful... thanks!

Thanks for the feedback! Will add that feature and let you know!

@sreno77 I added the feature you requested in case you want to give it a try. There is only one restriction: if you read the input from the webcam, you need to show the output on the screen. Storing the output as MP4 requires writing the moov atom, which is done at the end of the stream; since a webcam stream never ends, the moov atom is never written. This restriction will be removed as we support more video formats.
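For readers curious about the moov restriction: an MP4 file is a sequence of boxes (atoms), each starting with a 4-byte big-endian size and a 4-byte type, and the `moov` box holds the index a player needs. The minimal sketch below uses hypothetical helpers `top_level_boxes` and `has_moov` to list a file's top-level boxes, so you can see whether `moov` was ever written. It handles the 64-bit "largesize" case (size field equal to 1) and the "runs to end of file" case (size 0), but does not descend into nested boxes.

```python
import struct
import sys
from typing import List, Tuple


def top_level_boxes(data: bytes) -> List[Tuple[str, int]]:
    """Return (type, size) for each top-level box in an MP4/ISO-BMFF byte string."""
    boxes: List[Tuple[str, int]] = []
    offset = 0
    while offset + 8 <= len(data):
        size, kind = struct.unpack(">I4s", data[offset:offset + 8])
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if offset + 16 > len(data):
                break
            (size,) = struct.unpack(">Q", data[offset + 8:offset + 16])
            header = 16
        elif size == 0:  # the box runs to the end of the file
            size = len(data) - offset
        if size < header:  # malformed or truncated; stop instead of looping forever
            break
        boxes.append((kind.decode("latin-1"), size))
        offset += size
    return boxes


def has_moov(data: bytes) -> bool:
    """True if a top-level 'moov' box is present."""
    return any(kind == "moov" for kind, _ in top_level_boxes(data))


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as f:
        print(top_level_boxes(f.read()))
```

A recording that was never finalized would typically show `ftyp` and `mdat` here but no `moov`.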

So, just set the input video URI in the config to `v4l2` and the output video URI to `screen`. Let me know if there is anything else you would like to do or if you would like to talk about your specific use case. I would be really interested in understanding it.

Thanks for the hard work!