Originally posted on: scapegoat.dev

I am notoriously distracted and both my long and short term memories are less reliable than a hobbyist's kubernetes cluster. After 20 years of python, I still can't remember what the arguments to split are.

Thankfully, those days are over, as I can now ask an equally clueless LLM the myriad questions I would otherwise have to google every day (please don't use google, use kagi, you'll thank me later). Being part of a secret society of robo-scientists, I have been building some visionary software over the last couple of months, drawing inspiration from many sources, because writing software is about being in touch with the universe.

More prosaically, I have been writing some LLM-powered command line tools, as many people have before me and many people will after my bones are dust and my ideas forgotten. All the tools I present in this blog post have been written in a single day, because that's the world we now live in.

Some preliminary examples

Here is a little taste of the things that are possible with these tools.

All of these examples were built in a couple of hours altogether. By the end of the article, you will be able to build them too, with no code involved.

You might see a mix of `pinocchio` commands and `llmX` commands. The `llmX` commands are just aliases for `pinocchio prompts code X`, and the `llmX-3.5` commands are aliases that use the gpt-3.5-turbo model (`pinocchio prompts code X --openai-engine gpt-3.5-turbo`).

Reversing a string in go

Let's start with a simple interview question: reverse a string in go.
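The video's answer isn't reproduced here. For reference, one common answer is a rune-based swap. This is a minimal sketch, and the function name `Reverse` is our own. Converting to `[]rune` keeps multi-byte UTF-8 characters intact. Accents written as separate combining code points will still be split, though.

```go
package main

import "fmt"

// Reverse returns s with its runes in reverse order. Converting to []rune
// first keeps multi-byte UTF-8 characters intact; reversing the raw bytes
// would corrupt them.
func Reverse(s string) string {
	r := []rune(s)
	for i, j := 0, len(r)-1; i < j; i, j = i+1, j-1 {
		r[i], r[j] = r[j], r[i]
	}
	return string(r)
}

func main() {
	fmt.Println(Reverse("Hello, 世界")) // prints "界世 ,olleH"
}
```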

Getting some ffmpeg help

Too lazy for manpages? Extremely reliable LLM to the rescue!

Getting some vim help

Some more reliable, trusted help.

Creating a script to help us convert these goddamn videos

Recording these little screencast videos is tedious, let's get some help.

Explaining a terraform file

What the heck did I do 2 months ago?

Writing a bandcamp search client

Too lazy to read API docs? Just paste a curl request in there! Documentation is for chumps!

Code review that genius code

I'm sure this won't miss a single thing. It sure is more annoying than my colleague Janine.

Give the intern some advice

But don't be so blunt.

Create some slides for the weekly review

Tufte said slides shouldn't have bullet points. We disagree.

Installing pinocchio

In order for you, dear reader, to follow along on this adventure, you first need to install pinocchio. Nothing could be easier, as we provide binaries for linux and mac (x86 and arm), as well as RPM and deb packages. All the GO GO GADGETS are also published on homebrew and as docker images, if that's your jam.

After configuring your OpenAI API key, you should be all set to go. For more precise instructions, check out the installation guide.

Geppetto - LLM framework

Over the last couple of months, like every other developer out there, I have been working on an LLM framework that will make it trivial to build AMAZING CRAZY STUFF.

The core architecture of this very advanced framework boils down to:

- take input values

- interpolate them into a big string using the go templating engine

- send the string over HTTP

- print out the response

(This is why they call me senior engineer).

The framework's main claim to fame is its cool name: geppetto is a client for GPT, and pinocchio, the puppet born of geppetto's woodworking, is the CLI tool used to run applications built with the framework. Of course, LLMs being what they are, pinocchio often lies.

Declarative commands

Jokes aside, general consensus amongst the GO GO GOLEMS has it that geppetto and pinocchio are pretty cool. They are both based on the glazed framework, more precisely on its `Command` abstraction.

This groundbreaking abstraction of a "general purpose command" consists of:

- a set of input flags and parameters (which can be grouped into layers because having 800 flags tends to get overwhelming), each having a type, a default value and a description

- a `Run()` function that executes the command

- some kind of output (either structured tabular data, which is what glazed is built for, or just a sequence of bytes, in our case)

Most of the actual engineering in glazed focuses on:

- making it easy to define flags declaratively (usually as YAML)

- parsing flags at runtime (usually using the cobra framework)

- running the actual `Command` by calling `Run`

- processing the tabular output in a myriad of ways, none of which matter today

After handling `\r\n` and `\n`, converting between strings and integers is the second hardest engineering problem known to man. This is why this framework is senior principal staff engineer grade.

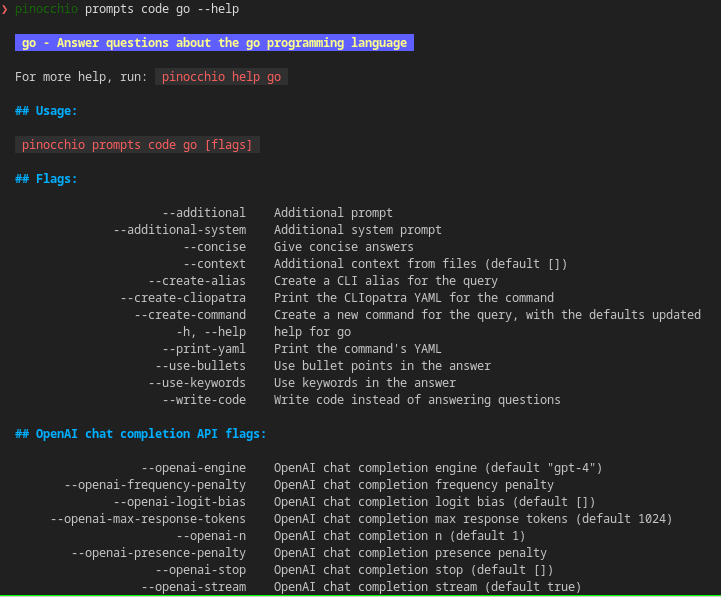

For example, the flags for the go command shown in the first video look like this:

```yaml
flags:
  - name: additional_system
    type: string
    help: Additional system prompt
    default: ""
  - name: additional
    type: string
    help: Additional prompt
    default: ""
  - name: write_code
    type: bool
    help: Write code instead of answering questions
    default: false
  - name: context
    type: stringFromFiles
    help: Additional context from files
  - name: concise
    type: bool
    help: Give concise answers
    default: false
  - name: use_bullets
    type: bool
    help: Use bullet points in the answer
    default: false
  - name: use_keywords
    type: bool
    help: Use keywords in the answer
    default: false
arguments:
  - name: query
    type: stringList
    help: Question to answer
    required: true
```

glazed provides a variety of standard types: strings, lists of strings, objects, strings from files, booleans, integers, you name it.
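The "second hardest problem" boils down to mapping each declared type to a parser for the raw command-line string. Below is a minimal sketch that uses only `strconv`, `strings` and `time`. `parseValue` is a hypothetical name. The comma-separated list syntax and the `2006-01-02` date layout are assumptions, not glazed's documented behavior.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
	"time"
)

// parseValue converts a raw command-line string into a typed value according
// to the flag's declared type. The type names mirror the YAML examples; the
// comma-separated list syntax and the date layout are assumptions.
func parseValue(typ, raw string) (any, error) {
	switch typ {
	case "string":
		return raw, nil
	case "bool":
		return strconv.ParseBool(raw)
	case "int":
		return strconv.Atoi(raw)
	case "stringList":
		if raw == "" {
			return []string{}, nil
		}
		return strings.Split(raw, ","), nil
	case "date":
		return time.Parse("2006-01-02", raw)
	default:
		return nil, fmt.Errorf("unsupported flag type %q", typ)
	}
}

func main() {
	inputs := [][2]string{
		{"int", "42"},
		{"bool", "true"},
		{"stringList", "gift%,order%"},
		{"date", "2024-04-29"},
	}
	for _, in := range inputs {
		v, err := parseValue(in[0], in[1])
		fmt.Printf("%-10s %-14q -> %v (err: %v)\n", in[0], in[1], v, err)
	}
}
```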

These declarative flags as well as layers added by the application all get wired up as CLI flags. Because this is user-first development, the output is colored.

If we were to use something like parka, these flags would be exposed as REST APIs, HTML forms and file download URLs. For example, sqleton can be run as a webserver for easy querying of a database.

```yaml
flags:
  - name: today
    type: bool
    help: Select only today's orders
    default: true
  - name: from
    type: date
    help: From date
  - name: to
    type: date
    help: To date
  - name: status
    type: stringList
    help: Batch status
  - name: limit
    help: Limit the number of results
    type: int
    default: 0
  - name: offset
    type: int
    help: Offset
    default: 0
  - name: order_by
    type: string
    default: id DESC
    help: Order by
```

But that is a story for another time.

GeppettoCommand - look ma, no code

Now that we know how to define a command's input parameters, let's get to what a geppetto command is actually made of.

A geppetto command:

- uses its input parameters to interpolate a set of messages (system prompt, user messages, assistant messages)

- fills out the parameters for a request to OpenAI's completion API

- fires off an HTTP request

- streams the output back to the standard output.

Geppetto supports specifying functions too, but I've never used them because I try to use boring technology, not the latest fancy shiny new thing.

None of this requires massive amounts of clever computation, which is why we can also fit all of this into the same YAML file. For example, the go command from before looks like this:

```yaml
name: go
short: Answer questions about the go programming language
factories:
  openai:
    client:
      timeout: 120
    completion:
      engine: gpt-4
      temperature: 0.2
      max_response_tokens: 1024
      stream: true
flags:
  # snip snip ...
system-prompt: |
  You are an expert golang programmer. You give concise answers for expert users.
  You give concise answers for expert users.
  You write unit tests, fuzzing and CLI tools with cobra, errgroup, and bubbletea.
  You pass context.Context around for cancellation.
  You use modern golang idioms.
prompt: |
  {{ if .write_code }}Write go code for the following task.
  Output only the code, but write a lot of comments as part of the script.{{ else -}}
  Answer the following question about go. Use code examples. Give a concise answer.
  {{- end }}
  {{ .query | join " " }}
  {{ .additional }}
  {{ if .context -}}
  {{ .context }}
  {{- end }}
  {{ if .concise -}}
  Give a concise answer, answer in a single sentence if possible, skip unnecessary explanations.
  {{- end }}
  {{ if .use_bullets -}}
  Use bullet points in the answer.
  {{- end }}
  {{ if .use_keywords -}}
  Use keywords in the answer.
  {{- end }}
```

You can see here how we first specify the OpenAI API parameters:

- we are using the gpt-4 model by default (try using gpt-3.5-turbo for programming, I dare you)

- with a reasonably low temperature.

Of course, all these settings can be overridden from the command line.

After the (omitted) flags definition from before, we get to the special sauce:

- a system prompt template telling the LLM exactly who it is (or rather, giving it a special `SYS` token followed by some off-puttingly prescriptive tokens, in the hope that all this conjuring will cause future tokens to be tokens that are USEFUL TO ME)

- a message prompt template that will be passed as a user message to the LLM, again in the hope that this little preamble will cause the model to generate something at least remotely useful. I think of it as having a fortune reader draw some language-shaped cards that tell me what code to write next.
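To see what the model actually receives, the prompt template can be rendered with Go's `text/template`. Note that `join` is not a `text/template` built-in; it comes from helper libraries such as sprig. This minimal sketch therefore registers its own sprig-style `join` that takes the separator first, which is what makes `.query | join " "` work. The trimmed-down template and the `render` function are illustrative, not pinocchio's code. The `{{-` and `-}}` markers trim the adjacent whitespace, so skipped sections leave no stray newlines behind.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// promptTmpl is a trimmed-down version of the go command's prompt template.
const promptTmpl = `{{ if .write_code }}Write go code for the following task.{{ else -}}
Answer the following question about go.
{{- end }}
{{ .query | join " " }}
{{ if .concise -}}
Give a concise answer.
{{- end }}`

// render interpolates the prompt template with the given flag values.
func render(values map[string]any) (string, error) {
	funcs := template.FuncMap{
		// Sprig-style join: separator first, so the piped list arrives last.
		"join": func(sep string, items []string) string { return strings.Join(items, sep) },
	}
	t, err := template.New("prompt").Funcs(funcs).Parse(promptTmpl)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	err = t.Execute(&sb, values)
	return sb.String(), err
}

func main() {
	out, err := render(map[string]any{
		"write_code": false,
		"query":      []string{"how", "do", "I", "reverse", "a", "string?"},
		"concise":    true,
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```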

While what pinocchio does borders on magic (as all things having to do with LLMs), it is not too complicated to follow:

- it interpolates the templates

- it collects the system prompt and the prompt as messages

- it sends off those messages to the openAI API

- it outputs the streaming responses (if streaming is enabled)

YAML is nice

The nice part (the really nice part) about being able to create "rich" command line applications using glazed and geppetto is that you can now experiment with prompt engineering by using command line flags instead of having to write custom test runners and data tables. This simple example-driven template is usually enough to reproduce most papers from 2021 and 2022, as shown by various datasets used in the Chain Of Thought paper.

```yaml
name: example-driven
short: Show a chain of thought example
flags:
  - name: question
    type: string
    help: The question to ask
    required: true
  - name: problem
    type: string
    help: The problem to solve
    required: false
  - name: instructions
    type: string
    help: Additional instructions to follow
    required: false
  - name: examples
    type: objectListFromFile
    required: true
prompt: |
  {{ if .problem -}}
  Problem: {{ .problem }}
  {{- end }}
  {{ range $i, $example := .examples }}
  Q: {{ $example.question }}
  A: {{ $example.answer }}
  {{ end -}}
  {{ if .instructions }}
  Instructions: {{ .instructions }}
  {{- end }}
  Q: {{ .question }}
  A:
```
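The `range` loop is where the examples file turns into few-shot demonstrations. Below is a minimal sketch, assuming the examples are a JSON list of objects with `question` and `answer` keys. The real `objectListFromFile` type may also accept other formats such as YAML. `renderFewShot` is a hypothetical name.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
	"text/template"
)

// fewShot is a reduced version of the example-driven prompt template.
const fewShot = `{{ range $i, $example := .examples -}}
Q: {{ $example.question }}
A: {{ $example.answer }}
{{ end -}}
Q: {{ .question }}
A:`

// renderFewShot decodes the examples and interpolates them ahead of the
// actual question, leaving "A:" open for the model to complete.
func renderFewShot(examplesJSON, question string) (string, error) {
	var examples []map[string]any
	if err := json.Unmarshal([]byte(examplesJSON), &examples); err != nil {
		return "", err
	}
	t, err := template.New("few-shot").Parse(fewShot)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	err = t.Execute(&sb, map[string]any{"examples": examples, "question": question})
	return sb.String(), err
}

func main() {
	examples := `[
		{"question": "What is 2 + 2?", "answer": "2 + 2 = 4. The answer is 4."},
		{"question": "What is 3 * 3?", "answer": "3 * 3 = 9. The answer is 9."}
	]`
	out, err := renderFewShot(examples, "What is 4 + 5?")
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```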

And of course, it is entirely possible to generate GeppettoCommands using GeppettoCommands, as shown by this example which can be used to generate SqletonCommands (the same concept, but for SQL).

Using a GeppettoCommand that generates GeppettoCommands to generate itself risks immanentizing the eschaton and bringing singularity into being, so use these new-found powers with caution.

```yaml
name: quine
short: Generate yourself!
flags:
  - name: example_goal
    help: Example goal
    type: string
    default: Generate a program to generate itself.
  - name: instructions
    type: string
    help: Additional language specific instructions
    required: false
  - name: example
    type: stringFromFile
    help: Example program
    required: true
  - name: goal
    type: string
    help: The goal to be generated
    default: Generate a program to generate itself.
prompt: |
  Write a program by generating a YAML describing a command line application with flags and a prompt template using
  go template expansion.
  The flags are used to interpolate the prompt template.
  Here is an example.
  --- GOAL: {{ .example_goal }}
  --- START PROGRAM
  {{ .example | indent 4 }}
  --- END
  Generate a program to {{ .goal }}.
  {{ if .instructions }}{{ .instructions }}{{ end }}
  --- GOAL: {{ .goal }}
  --- START PROGRAM
```

SQLETON excursion - generating SQL queries using generated SQL queries

SQLETON excursion - generating SQL queries using generated SQL queries

Let's imagine that we want to generate SqletonCommands using the schema from a table in the database, but we are so lazy that we don't want to copy that schema. Wouldn't it be nice if we had a SqletonCommand to introspect a schema?

```
pinocchio examples code sqleton --topic "Create a command to output the schema of a table in mysql. It should take flags: tables, tables_like, columns, columns_like, type. Output the table name as column as well."
```

To which the LLM responds:

Here is an example of a command that outputs the schema of a table in MySQL using the provided YAML template:

```yaml
name: schema
short: Output the schema of a table in MySQL.
flags:
  - name: tables
    type: stringList
    help: List of table names
  - name: tables_like
    type: stringList
    help: List of table name patterns to match
  - name: columns
    type: stringList
    help: List of column names
  - name: columns_like
    type: stringList
    help: List of column name patterns to match
  - name: type
    type: string
    help: Column data type
query: |
  SELECT
    TABLE_NAME AS table_name,
    COLUMN_NAME AS column_name,
    COLUMN_TYPE AS column_type,
    IS_NULLABLE AS is_nullable,
    COLUMN_KEY AS column_key,
    COLUMN_DEFAULT AS column_default,
    EXTRA AS extra
  FROM
    INFORMATION_SCHEMA.COLUMNS
  WHERE 1=1
  {{ if .tables }}
    AND TABLE_NAME IN ({{ .tables | sqlStringIn }})
  {{ end }}
  {{ if .tables_like }}
    AND (
      {{ range $index, $table := .tables_like }}
        TABLE_NAME LIKE '{{ $table }}'
        {{ if not (last $index .tables_like) }}
          OR
        {{ end }}
      {{ end }}
    )
  {{ end }}
  {{ if .columns }}
    AND COLUMN_NAME IN ({{ .columns | sqlStringIn }})
  {{ end }}
  {{ if .columns_like }}
    AND (
      {{ range $index, $column := .columns_like }}
        COLUMN_NAME LIKE '{{ $column }}'
        {{ if not (last $index .columns_like) }}
          OR
        {{ end }}
      {{ end }}
    )
  {{ end }}
  {{ if .type }}
    AND COLUMN_TYPE = '{{ .type }}'
  {{ end }}
  ORDER BY table_name
```

In this example, the `tables` flag accepts a list of table names, the `tables_like` flag accepts a list of table name patterns to match using the `LIKE` operator, the `columns` flag accepts a list of column names, the `columns_like` flag accepts a list of column name patterns to match using the `LIKE` operator, and the `type` flag accepts a column data type.

The query uses the `INFORMATION_SCHEMA.COLUMNS` table to retrieve the schema information. The `WHERE` clause is dynamically generated based on the provided flags. The `ORDER BY` clause orders the results by the table name.

Note that the `sqlStringIn` function is used to generate the `IN` clause for string values, and the `last` function is used to determine if it is the last element in a list during iteration.
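The LLM's explanation names two helpers, `sqlStringIn` and `last`, without showing them. Below is a hypothetical reimplementation for illustration only; it is not sqleton's actual code. Escaping quotes by doubling them is a simplification, and bound query parameters are the safer choice. In `text/template`, dot is rebound to the current element inside `range`. That is why this sketch reaches the list through the root variable as `$.tables_like`.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// sqlStringIn quotes each value and joins them for use in an IN (...) clause.
// Single quotes are escaped by doubling them (a simplification).
func sqlStringIn(values []string) string {
	quoted := make([]string, len(values))
	for i, v := range values {
		quoted[i] = "'" + strings.ReplaceAll(v, "'", "''") + "'"
	}
	return strings.Join(quoted, ",")
}

// last reports whether index is the final position in list.
func last(index int, list []string) bool {
	return index == len(list)-1
}

// where is a condensed version of the generated WHERE clause. Note that the
// LIKE patterns are interpolated as-is, just like in the generated template.
const where = `WHERE 1=1
{{- if .tables }} AND TABLE_NAME IN ({{ .tables | sqlStringIn }}){{ end }}
{{- if .tables_like }} AND ({{ range $i, $t := .tables_like }}TABLE_NAME LIKE '{{ $t }}'{{ if not (last $i $.tables_like) }} OR {{ end }}{{ end }}){{ end }}`

func renderWhere(values map[string]any) (string, error) {
	t, err := template.New("where").Funcs(template.FuncMap{
		"sqlStringIn": sqlStringIn,
		"last":        last,
	}).Parse(where)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	err = t.Execute(&sb, values)
	return sb.String(), err
}

func main() {
	out, err := renderWhere(map[string]any{
		"tables":      []string{"giftcards"},
		"tables_like": []string{"gift%", "order%"},
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```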

Storing that command as mysql schema, we can now easily introspect various tables, leveraging all the affordances provided by glazed.

```
❯ sqleton mysql schema --tables giftcards
+------------+---------------+-----------------+-------------+------------+----------------+-------+
| table_name | column_name   | column_type     | is_nullable | column_key | column_default | extra |
+------------+---------------+-----------------+-------------+------------+----------------+-------+
| giftcards  | active        | tinyint         | NO          | MUL        | 0              |       |
| giftcards  | amount        | decimal(10,2)   | NO          |            | 0.00           |       |
| giftcards  | balance       | decimal(10,2)   | NO          |            | 0.00           |       |
| giftcards  | code          | varchar(200)    | NO          |            | <nil>          |       |
| giftcards  | created_at    | datetime        | YES         | MUL        | <nil>          |       |
| giftcards  | expired       | int             | YES         | MUL        | <nil>          |       |
| giftcards  | expires_at    | datetime        | YES         | MUL        | <nil>          |       |
| giftcards  | free_shipping | tinyint         | NO          | MUL        | 0              |       |
| giftcards  | id            | int unsigned    | NO          | PRI        | 0              |       |
| giftcards  | note          | mediumtext      | YES         |            | <nil>          |       |
| giftcards  | open_amount   | decimal(10,2)   | NO          |            | 0.00           |       |
| giftcards  | order_date    | date            | YES         | MUL        | <nil>          |       |
| giftcards  | order_id      | bigint unsigned | YES         | MUL        | 0              |       |
| giftcards  | order_number  | longtext        | YES         | MUL        | <nil>          |       |
| giftcards  | redeemed      | decimal(11,2)   | NO          |            | 0.00           |       |
| giftcards  | TYPE          | varchar(9)      | NO          | MUL        |                |       |
+------------+---------------+-----------------+-------------+------------+----------------+-------+
```

Or slightly smaller:

```
❯ sqleton mysql schema --tables giftcards --columns-like '%order%' --output yaml --filter extra,column_default
- table_name: giftcards
  column_name: order_date
  column_type: date
  is_nullable: "YES"
  column_key: MUL
- table_name: giftcards
  column_name: order_id
  column_type: bigint unsigned
  is_nullable: "YES"
  column_key: MUL
- table_name: giftcards
  column_name: order_number
  column_type: longtext
  is_nullable: "YES"
  column_key: MUL
```

We can easily use our new mysql schema command to create new SqletonCommands, say one to query our giftcards table.

```
❯ sqleton mysql schema \
    --tables giftcards \
    --fields column_name,column_type,is_nullable \
    --output yaml |
  pinocchio examples code sqleton --ddl - \
    --topic "Giftcards from an ecommerce database, used as giftcards but also warranty and courtesy refunds." \
    --instructions "Don't create flags for individual amounts. Create a codes_like flag."
```

To which the LLM responds:

Here is the updated YAML template for querying the giftcards table:

```yaml
name: giftcards
short: Get giftcards from the database.
flags:
  - name: id
    type: intList
    help: List of giftcard ids
  - name: code
    type: stringList
    help: List of giftcard codes
  - name: active
    type: bool
    help: Giftcard active status
  - name: expired
    type: bool
    help: Giftcard expired status
  - name: expires_at
    type: date
    help: Giftcard expiration date
  - name: order_id
    type: int
    help: Order ID associated with the giftcard
  - name: order_number
    type: string
    help: Order number associated with the giftcard
  - name: redeemed
    type: float
    help: Giftcard redeemed amount
  - name: type
    type: string
    help: Giftcard type
  - name: codes_like
    type: stringList
    help: List of giftcard codes to filter by using LIKE queries
  - name: limit
    type: int
    help: Limit the number of results
    default: 0
  - name: offset
    type: int
    help: Offset
    default: 0
  - name: order_by
    type: string
    default: created_at DESC
    help: Order by
query: |
  {{ if .explain }}
    EXPLAIN
  {{ end }}
  SELECT
    id,
    code,
    active,
    expired,
    expires_at,
    order_id,
    order_number,
    redeemed,
    type
  FROM giftcards
  WHERE 1=1
  {{ if .id }}
    AND id IN ({{ .id | sqlIntIn }})
  {{ end }}
  {{ if .code }}
    AND code IN ({{ .code | sqlStringIn }})
  {{ end }}
  {{ if .active }}
    AND active = {{ .active }}
  {{ end }}
  {{ if .expired }}
    AND expired = {{ .expired }}
  {{ end }}
  {{ if .expires_at }}
    AND expires_at = {{ .expires_at | sqlDate }}
  {{ end }}
  {{ if .order_id }}
    AND order_id = {{ .order_id }}
  {{ end }}
  {{ if .order_number }}
    AND order_number = '{{ .order_number }}'
  {{ end }}
  {{ if .redeemed }}
    AND redeemed = {{ .redeemed }}
  {{ end }}
  {{ if .type }}
    AND type = '{{ .type }}'
  {{ end }}
  {{ if .codes_like }}
    AND (
      {{ range $index, $code := .codes_like }}
        code LIKE '%{{ $code }}%'
        {{ if not (last $index .codes_like) }}
          OR
        {{ end }}
      {{ end }}
    )
  {{ end }}
  ORDER BY {{ .order_by }}
  {{ if .limit }}
    LIMIT {{ .limit }}
    {{ if .offset }}
      OFFSET {{ .offset }}
    {{ end }}
  {{ end }}
```

In this template, I have added flags for the different columns in the giftcards table and modified the WHERE clause to include the new flags. I have also added support for the `codes_like` flag, which takes a list of giftcard codes and generates a filter statement with multiple LIKE queries joined by OR. The `order_by` flag is used instead of `sort_by` to specify the order of the results.

We can now of course run this command and benefit from all the cool glazed bells and whistles.

```
❯ sqleton run-command /tmp/giftcards.yaml --limit 5
+-------+---------------------+--------+---------+---------------------+----------+--------------+----------+----------+
| id    | code                | active | expired | expires_at          | order_id | order_number | redeemed | type     |
+-------+---------------------+--------+---------+---------------------+----------+--------------+----------+----------+
| 22148 | WRHL-3242-KSKD-SZ4Z | 1      | 0       | 2024-04-29 00:00:00 | <nil>    | <nil>        | 0.00     | coupon   |
| 22147 | NHDC-3YF5-2421-VP25 | 1      | 0       | 2024-04-29 00:00:00 | <nil>    | <nil>        | 259.00   | coupon   |
| 22146 | DAB1-1X6Z-K9AV-XXWF | 1      | 0       | 2024-04-29 00:00:00 | <nil>    | <nil>        | 0.00     | warranty |
| 22145 | WFFT-BXEU-6WGT-E559 | 1      | 0       | 2024-04-29 00:00:00 | <nil>    | <nil>        | 99.50    | warranty |
| 22144 | VCCC-FEBP-KP12-U9P3 | 0      | 0       | 2024-04-29 00:00:00 | <nil>    | <nil>        | 0.00     | coupon   |
+-------+---------------------+--------+---------+---------------------+----------+--------------+----------+----------+
```

Storing and sharing commands

Once you get ahold of such powerful AI programs^W^W^Wcrude word templates, you can stash them in what is called a "repository". A repository is a directory containing YAML files (plans exist to have repositories backed by a database, but that technology is not within our reach yet). The directory structure is mirrored as verb structure in the CLI app (or URL path when deploying as an API), and the individual YAML files represent actual commands. These repositories can be configured in the config file as well, as described in the README.

You can also create aliases using the --create-alias NAME flag. The resulting YAML has to be stored under the same verb path as the original command. So, an alias for prompts code php will have to be stored under prompts/code/php/ in one of your repositories.

Let's say that we want to get a rundown of possible unit tests:

```
❯ pinocchio prompts code professional --use-bullets "Suggest unit tests for the following code. Don't write any test code, but be exhaustive and consider all possible edge cases." --create-alias suggest-unit-tests
name: suggest-unit-tests
aliasFor: professional
flags:
  use-bullets: "true"
arguments:
  - Suggest unit tests for the following code. Don't write any test code, but be exhaustive and consider all possible edge cases.
```

By storing the result as suggest-unit-tests.yaml in prompts/code/professional, we can now easily suggest unit tests:

```
❯ pinocchio prompts code professional suggest-unit-tests --context /tmp/reverse.go --concise
- Test with an empty string to ensure the function handles it correctly.
- Test with a single character string to check if the function returns the same character.
- Test with a two-character string to verify the characters are swapped correctly.
- Test with a multi-character string to ensure the string is reversed correctly.
- Test with a string that contains special characters and numbers to ensure they are reversed correctly.
- Test with a string that contains Unicode characters to verify the function handles them correctly.
- Test with a string that contains spaces to ensure they are preserved in the reversed string.
- Test with a string that contains repeated characters to ensure the order is reversed correctly.
- Test with a very long string to check the function's performance and correctness.
```
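Conceptually, running an alias means layering its stored flags and arguments under whatever you type on the command line. This is a hypothetical sketch of that merge. The `Alias` struct mirrors the YAML fields produced by `--create-alias`. The precedence rules are our assumption, not documented pinocchio behavior: command-line flags win, and stored arguments apply only when none are given.

```go
package main

import "fmt"

// Alias mirrors the fields of the alias YAML produced by --create-alias.
type Alias struct {
	Name      string
	AliasFor  string
	Flags     map[string]string
	Arguments []string
}

// Resolve combines the alias's stored flags and arguments with whatever was
// given on the command line. Command-line flags override stored ones, and
// stored arguments are used only when no arguments were passed (assumed rules).
func (a Alias) Resolve(cliFlags map[string]string, cliArgs []string) (map[string]string, []string) {
	flags := make(map[string]string, len(a.Flags)+len(cliFlags))
	for k, v := range a.Flags {
		flags[k] = v
	}
	for k, v := range cliFlags {
		flags[k] = v
	}
	args := cliArgs
	if len(args) == 0 {
		args = a.Arguments
	}
	return flags, args
}

func main() {
	alias := Alias{
		Name:      "suggest-unit-tests",
		AliasFor:  "professional",
		Flags:     map[string]string{"use-bullets": "true"},
		Arguments: []string{"Suggest unit tests for the following code."},
	}
	flags, args := alias.Resolve(map[string]string{"context": "/tmp/reverse.go", "concise": "true"}, nil)
	fmt.Println(flags, args)
}
```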

Of course, all these commands can themselves be aliased to shorter commands using standard shell aliases.

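```shell
# Define an llmX shortcut and an llmX-3.5 (gpt-3.5-turbo) shortcut
# for every `pinocchio prompts code X` command.
for i in aws bash emacs go php python rust typescript sql unix professional; do
  alias llm$i="pinocchio prompts code $i"
  alias llm${i}-3.5="pinocchio --openai-engine gpt-3.5-turbo prompts code $i"
done
```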

What does this all mean?

An earth-shattering consequence of this heavenly design is that you can add repositories for various GO GO GADGETS such as sqleton, escuse-me, geppetto or oak to your projects' codebases, point to them in your config file, and BAM, you now have a rich set of CLI tools that is automatically shared across your team and kept in source control right alongside the rest of your code.

This is especially useful for encoding custom refactorings or other rote operations (say, scaffolding the nth API glue to import data into your system). Where you would previously have had to build a proper AST parser, an AST transformer and an output template, you can now write refactoring tools with basically the same effort as writing "well, actually, wouldn't it be nice if…" on slack.

Remember the words that once echoed through these desolate halls:

WHEN LIFE GIVES YOU STYLE GUIDES, DON'T NITPICK IN CODE REVIEWS. MAKE EXECUTABLE STYLE GUIDES! DEMAND TO SEE TECHNOLOGY'S MANAGER! MAKE IT RUE THE DAY IT GAVE US EXECUTABLE SPEECH! DO YOU KNOW WHO WE ARE? WE ARE THE GO GO GOLEMS THAT ARE GOING TO BURN YOUR CODEBASE DOWN.

Here is what that can look like: a command that converts the attributes of existing classes into PHP 8 constructors using constructor property promotion.

```yaml
name: php-property-promotion
short: Generate a class with constructor property promotion
factories:
  openai:
    client:
      timeout: 120
    completion:
      engine: gpt-4
      temperature: 0.2
      max_response_tokens: 1024
      stop:
        - // End Output
      stream: true
flags:
  - name: instructions
    type: string
    help: Additional language specific instructions
  - name: readonly
    type: bool
    default: true
    help: Make the class readonly
arguments:
  - name: input_file
    type: stringFromFile
    help: Input file containing the attribute definitions
    required: true
prompt: |
  Write a {{ if .readonly }}readonly{{end}} PHP class with constructor property promotion for the following fields.
  {{ if .instructions }}{{ .instructions }}{{ end }}
  For example:
  // Input
  public ?int $productId; // Internal product ID for reference
  public ?string $itemId; // The ID of the item
  // End Input
  // Output
  public function __construct(
      /** Internal product ID for reference */
      public ?int $productId = null,
      /** The ID of the item */
      public ?string $itemId = null,
  ) {}
  // End Output
  Now create a constructor for the following fields.
  // Input
  {{ .input_file }}
  // End Input
  // Output
```

This I think is the most exciting aspect of LLMs. They make it possible to build ad-hoc tooling that is able (even if stochastically hitting or missing) to do rote but complex, ill-defined, time-consuming work that is very specific to the problem and situation at hand. I have been using tools like the above to cut through unknown codebases, add unit tests, clean up READMEs, write CLI tools, generate reports, and much more.

The ones I built today are quite useful and make for cool demos, but the real value lies in being able to do very fuzzy, ad-hoc work that needs to be repeated at scale.

Where do we go from here?

I hope to make it easier to share and package these custom prompts and slowly start advertising GO GO GOLEMS and its ecosystem more, in order to get community contributions.

A second project that is underway is building agent tooling. Single prompt applications are useful but often suffer from limited context sizes and lack of iteration. I have been working on a "step" abstraction that should make it possible to run complex agent workflows supporting iteration, error handling, user interaction and caching.

A third project underway is a "prompt context manager" to make it easy to augment the LLM applications with additional information, coming either from external documents, live queries against a codebase, external APIs, saved snippets and summaries from past conversations, etc…

Finally, I have a currently closed-source framework that makes it possible to deploy any glazed command (i.e., a single YAML file) as a web API, a lambda function or a WASM plugin. I hope to be able to port this part to open source, as it makes the tools exponentially more useful.


I strongly believe that ad-hoc, custom, personal tools powered by large language models are going to be an important paradigm going forward. What do you think? Do you build your own tools for LLMs instead of just chatting with GPT directly?