(Note, this article continues from Part 1: AWS Metrics: Advanced)

We can't use Prometheus

It turns out Prometheus can't support serverless. Prometheus works by polling your service endpoints, fetching the data they expose, and storing it in its own database. For simple things you would just expose the current "CPU and Memory percentages". That works for virtual machines. It does not work for ECS Fargate, and definitely does not work for AWS Lambda.

There is actually a blessed solution to this problem. Prometheus suggests what is known as a PushGateway. What you do is deploy yet another service which you run, and you can push metrics to. Then later, Prometheus can come and pick up the metrics by polling the PushGateway.

There is zero documentation for this. And that's because Prometheus was built to solve one problem, K8s. Prometheus exists because K8s is way too complicated to monitor and track usage yourself, so all the documentation that you will find is a yaml file with some meaningless garbage in it.

Also, we don't want to run another service that does actual things. That's both a security concern and a maintenance burden. And the reason PushGateway exists, supposedly to solve the problems of serverless, is confusing: why doesn't Prometheus just support pushing events directly to it? If you look closely enough at the AWS console for Prometheus, you might also notice this:

What's a Remote Write Endpoint?

You've got me, because there is no documentation on it.

🗎 Prometheus RemoteWrite Documentation

So I'm going to write here the documentation for everything you need to know about Remote Writing, which Prometheus does support. Although you won't find any documentation anywhere on it, and I'll explain why.

Throughout your furious searching on the internet for how to get Prometheus working with Lambdas and other serverless technology, you will no doubt find a large number of articles trying to explain how different types of metrics work in Prometheus. But metric types are a lie. They don't exist, they are fake, ignore them.

To explain how remote write works, I need to first explain what we've learned about Prometheus.

Prometheus Data Storage strategy

Prometheus stores time series, that's all it does. A time series has a number of labels, and a list of values, each at a particular time. It then makes those time series easy to query later. That's it, that's all there is.
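As a rough mental model (a sketch, not Prometheus internals), a time series can be pictured as one label set plus a list of timestamped samples. The labels/samples shape below mirrors what the prometheus-remote-write library used later in this article accepts; the metric name, the label values, and the seriesKey helper are made up for illustration.

```javascript
// A time series: one set of labels plus a list of (timestamp, value) samples.
// The metric name is just another label, stored under __name__.
const series = {
  labels: { __name__: 'requests_total', service: 'api', route: '/users' },
  samples: [
    { timestamp: 1700000000000, value: 3 }, // timestamps in milliseconds
    { timestamp: 1700000015000, value: 5 }
  ]
};

// Hypothetical helper: the identity of a series is its full label set,
// so two label objects with the same pairs (in any order) name the same series.
function seriesKey(labels) {
  return Object.keys(labels)
    .sort()
    .map((name) => `${name}="${labels[name]}"`)
    .join(',');
}

console.log(seriesKey(series.labels));
```

Change any label value and you have a different time series. That detail matters a lot later on.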

Metric types exist because the initial source of the metric data doesn't want to think about time series data. So the Prometheus SDKs offer a bunch of ways for you to create metrics, the SDKs internally convert those metric types into different time series, and then these time series are hosted on a /metrics endpoint, available for Prometheus to come by and collect.
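To make that concrete, here is a toy counter. It is not the real Prometheus SDK; the ToyCounter class and its methods are invented for this sketch. The point is only that a "metric type" is a convenience that ends up as one plain time series per label set.

```javascript
// Toy stand-in for an SDK "Counter". It only ever produces plain time series.
class ToyCounter {
  constructor(name) {
    this.name = name;
    this.totals = new Map(); // one running total per label set
  }

  inc(labels = {}, amount = 1) {
    const key = JSON.stringify(Object.entries(labels).sort());
    const entry = this.totals.get(key) || { labels, value: 0 };
    entry.value += amount;
    this.totals.set(key, entry);
  }

  // What a scrape (or a push) would see: one sample per series at time `now`.
  collect(now = Date.now()) {
    return [...this.totals.values()].map(({ labels, value }) => ({
      labels: { __name__: this.name, ...labels },
      samples: [{ timestamp: now, value }]
    }));
  }
}

const requests = new ToyCounter('requests_total');
requests.inc({ route: '/users' });
requests.inc({ route: '/users' });
requests.inc({ route: '/orders' });
console.log(JSON.stringify(requests.collect(1700000000000), null, 2));
```

Two label sets were incremented, so collect returns two time series. Nothing about "counter" survives in the output.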

This is confusing I know. It works for # of events of type A happened at Time B. But it does not support average response time for type T? I'll get to this later.

Handling data transfer

Because you could have multiple Prometheus services running in your architecture, they need to communicate with each other. This is where RemoteWrite comes in. RemoteWrite is meant for you to run your own Prometheus Service and use RemoteWrite to copy the data from one Prometheus to another one.

That's our ticket out of here. We can fake being a Prometheus service and publish our time series to the AWS Managed Prometheus service directly. If we fake being a Prometheus Server then as long as we fit the API used for handling this, we can actually push metrics to Prometheus. 🎉

The problem here is that most libraries don't support even writing to the RemoteWrite url. We need to figure out how to write to this url now that we have it and also how to secure it.

The Prometheus SDK

Luckily in Node.js there is the prometheus-remote-write library. It supports AWS SigV4, which means that we can put this library in a Lambda + APIGateway service and proxy requests to Prometheus through it. It also sort of handles the messy bit with the custom protobuf format. (Remember K8s was created by Google, so everything is more complicated than it needs to be). With APIGateway we can authenticate our microservices to call the metrics microservice we need to build. The API can take the request and use IAM to secure the push to Prometheus. (It's worth noting here that you can actually push from one AWS account to another's Prometheus Workspace, but trying to get this to work is bad for two reasons: one, you never want to expose one AWS account's infra services to another, that's just bad architecture, and two, trying to get a Lambda to assume a role and then pass the credentials correctly to the libraries that need them to authenticate is a huge headache).

And this is the whole code of the service:

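const { createSignedFetcher } = require('aws-sigv4-fetch');
const { pushTimeseries } = require('prometheus-remote-write');
const fetch = require('cross-fetch');

// Signs every outgoing request with SigV4 for the AWS Managed Prometheus ("aps") service.
const signedFetch = createSignedFetcher({
  service: 'aps',
  region: process.env.AWS_REGION,
  fetch
});

// Lambda handler; APIGateway has already authenticated the calling microservice.
exports.handler = async (request) => {
  // APIGateway proxy integrations deliver the body as a JSON string.
  const body = typeof request.body === 'string' ? JSON.parse(request.body) : request.body;

  const options = {
    url: 'https://aps-workspaces.eu-central-1.amazonaws.com/workspaces/ws-00000000-0000-0000-00000000/api/v1/remote_write',
    fetch: signedFetch,
    // Extra labels applied to every pushed time series.
    labels: { service: body.service }
  };

  await pushTimeseries(body.series, options);
  return { statusCode: 200 };
};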
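For reference, a request body matching what this service reads (service and series) could look like the sketch below. The metric name, the label values, and the buildMetricsRequest helper are assumptions for illustration. The labels/samples shape is my understanding of what prometheus-remote-write expects for a series, so double check it against the library's README.

```javascript
// Hypothetical helper a calling microservice could use to build the body
// it sends to the metrics service.
function buildMetricsRequest(service, name, labels, value, timestamp = Date.now()) {
  return {
    service,
    series: [
      {
        labels: { __name__: name, ...labels },
        samples: [{ value, timestamp }] // timestamp in milliseconds
      }
    ]
  };
}

const body = buildMetricsRequest(
  'billing',
  'invoices_created_total',
  { region: 'eu' },
  1,
  1700000000000
);
console.log(JSON.stringify(body));
```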

And just like that we now have data in Prometheus...

But where is it?

???

So Prometheus has no viewer. Unlike DynamoDB and others, AWS provides no way to look at the data in Prometheus directly. So we have no idea if it is working. The API response tells us 200, but like good engineers we aren't willing to trust that. We've also turned on Prometheus logging and that's not really enough help. How the hell do we look at the data?

At this point we are praying there is some easy solution for displaying the data. My personal thought is this is how AWS gets you, AWS Prometheus is cheap, but you have to throw AWS Grafana on top in order to use it:

And that says $9 per user. Wow that's expensive to just look at some data. I don't even want to create graphs, just literally show me the data.

What's really cool though is Grafana Cloud offers a near free tier for just data display, and that might work for us:

Well at least the free tier makes it possible for us to validate our managed Prometheus service is getting our metrics.

And after way too much pain and suffering it turns out it is!

However, we only sent three metric updates to Prometheus, so why are there so many data points. The problem is actually in the response from AWS Prometheus. If we dive down into the actual request that Grafana is making to Prometheus we can see the results actually include all these data points. That means it isn't something weird with the configuration of the UI:

I'm pretty sure it has to do with the fact that the step size is 15s:

It doesn't really matter that it does this because all our graphs will be continuous anyway and expose the connected data. Also, since this is a sum over the timespan, we should absolutely treat this as having at minimum a 15s resolution, unless we actually do get summary metrics more frequently.
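One likely explanation, sketched here under assumptions: a range query is evaluated once per step, and at each evaluation time Prometheus returns the most recent sample inside a lookback window (5 minutes by default in open source Prometheus; I'm assuming the managed service behaves the same). So three stored samples can come back as many points. The rangeQuery function below is a simplified simulation, not Prometheus code.

```javascript
// Simplified simulation of how a range query samples stored data.
// Assumption: at each step the newest sample no older than `lookbackMs` is returned.
function rangeQuery(samples, startMs, endMs, stepMs, lookbackMs = 5 * 60 * 1000) {
  const points = [];
  for (let t = startMs; t <= endMs; t += stepMs) {
    const visible = samples.filter(
      (s) => s.timestamp <= t && t - s.timestamp <= lookbackMs
    );
    if (visible.length > 0) {
      const latest = visible.reduce((a, b) => (b.timestamp > a.timestamp ? b : a));
      points.push({ timestamp: t, value: latest.value });
    }
  }
  return points;
}

// Three stored samples, one minute apart.
const stored = [
  { timestamp: 0, value: 1 },
  { timestamp: 60000, value: 2 },
  { timestamp: 120000, value: 3 }
];

// Querying 3 minutes at a 15s step returns 13 points, not 3.
console.log(rangeQuery(stored, 0, 180000, 15000).length);
```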

No matter what anyone says, these graphs are beautiful. It was the first thing that hit me when I actually figured out what all the buttons were on the screen.

Quick Summary

- 🗸 Metrics stored in database

- 🗸 Cost effective storage

- 🗸 Display of metrics

- 🗸 Secured with AWS or our corporate SSO

- 🗸 Low TCO to maintain metric population

The Solution has been:

- AWS Prometheus

- Lambda Function

- Grafana Cloud

Some lessons here:

1. The Grafana UX is absolutely atrocious

Most of the awesome things you want to do aren't enabled by default. For instance, if you want to connect Athena to Grafana so that your bucket can be queried, you first have to enable the plugin for Athena in Grafana. And only then can you create a DataSource for Athena. It makes no sense why everything is hidden behind a plugin. The same is true for AWS Prometheus, it doesn't just work out of the box.

Second, even after you do that, your plugin still won't work. The datasource can't be configured in a way that works, because the data source configuration options need to be separately enabled by filing a support ticket with Grafana. I died a bit when they told me that.

In our products Authress and Standup & Prosper we take great pride in having everything self-service, that also means our products' features are discoverable by every user. Users don't read documentation. That's a fact, and they certainly don't file support tickets. That's why every feature has a clear name and description next to it to explain what it does. And you never have to jump to the docs, but they are there if you need them. We would never hide a feature behind a hidden flag that only our support has access to.

2. The documentation for Prometheus is equally bad

Since Prometheus was not designed to be useful but instead designed to be used with K8s, there is little to no documentation on using Prometheus to do anything useful. Everyone assumes you are using some antiquated technology like K8s and therefore the metrics creation and population is done for you. So welcome to pain, but at least now there is this guide so you too can effectively use Prometheus in a serverless fashion.

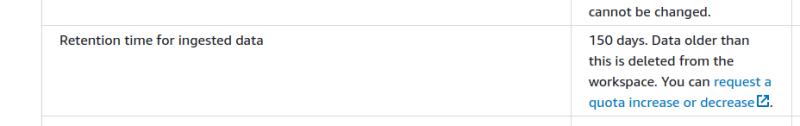

3. Always check the AWS quotas

The default retention is 150 days, but this can be increased. One of the problems with AWS CloudWatch is that if you mess up you have 15 months of charges. But here we start with only about 5 months. That's a huge difference. We'll plan to increase this, and I'm sure it will be configurable by API later.

Just remember you need to review quotas for every new service you start using so you don't get bitten later.

4. Lacks the polish of a secure solution

Grafana needs to be able to authenticate to our AWS account in order to pull the data it needs. It should only do this one of two ways:

- Uses the current logged-in Grafana user's OAuth token to exchange for a valid AWS IAM role

- Generates an OIDC JWT that can be registered in AWS

And yet we have...

It does neither of these. Nor does it support dynamic function code to better support this. Sad. We are forced to use an AWS IAM user with an access key + secret. Which we all know you should never ever use. And yet we have to do it here.

I will say, there is something called Private DataSource Connections (PDC), but it isn't well documented whether this actually solves the problem. Plus if it did, that means we'd have to write some Go, and no one wants to do that.

5. Prometheus metric types are a lie

Earlier I mentioned that perhaps you want metrics that are something other than a time series. The problem is Prometheus actually doesn't support that. That's confusing because you can find guides like this Prometheus Metric Types which lists:

- Counter

- Gauge

- Histogram

- Summary

- etc...

Also you'll notice the pathetic lack of libraries in Node.js and Rust.

How can these metric types exist in a time series way? The truth is they can't. And when Prometheus says you can have these, what it really means is that it will take your data and mash it into a time series even if it doesn't work.

A simple example is API Response Time. You could track request time in milliseconds, and then count the number of requests that took that long. There would be a new time series for every possible request time. That feels really wrong.

- 5 requests at 1ms

- 6 requests at 2ms

- 1 request at 3ms

- ...

But that's essentially how Prometheus works. We can do slightly better, and the solution here is to understand how the Histogram configuration works for Prometheus. What actually happens is that we need to a priori decide useful buckets to care about. For instance, we might create:

- Less than 10ms

- Less than 100ms

- Less than 1000ms

- ...

Then when we get a Response Time, we add +1 to each of the buckets that it matches. A 127ms request would only be in the 1000ms bucket, but a 5ms request would be in all three.
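The bucket update can be sketched like this. It is a toy version, not the SDK's implementation. Note that Prometheus's own histograms use "less than or equal" bounds (the le label) plus a catch-all +Inf bucket, which I've left out to keep it short; the bucketBounds and observe names are made up.

```javascript
// Cumulative "less than or equal" buckets, in milliseconds.
const bucketBounds = [10, 100, 1000];

// Add +1 to every bucket whose bound the response time fits under.
function observe(counts, responseTimeMs) {
  for (const bound of bucketBounds) {
    if (responseTimeMs <= bound) {
      counts[bound] = (counts[bound] || 0) + 1;
    }
  }
  return counts;
}

const counts = {};
observe(counts, 5);   // lands in 10, 100 and 1000
observe(counts, 127); // lands in 1000 only
console.log(counts);  // { '10': 1, '100': 1, '1000': 2 }
```

Each bucket is then just another time series, with the bound as a label.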

Later when querying for this data you can filter on the buckets (not the response time) that you care about. Which means something like 1000 buckets in 10ms steps may make sense, or:

- N buckets in 1ms steps from 1-20ms

- M buckets in 5ms steps from 20-100ms

- O buckets in 20ms steps from 100-1000ms

- P Buckets in 1s steps from 1000ms+

That's about ~100 buckets to keep track of. Depending on the SLOs and SLAs you have, you might need SLIs at different granularities.
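A layout like that is easy to generate rather than type out. This is a sketch: the buildBuckets helper is hypothetical, and capping the 1s steps at 20s is my assumption, since the list above leaves the upper end open.

```javascript
// Build bucket upper bounds (ms) from [from, to, step] ranges.
// Each range contributes from+step, from+2*step, ..., to.
function buildBuckets(ranges) {
  const bounds = [];
  for (const [from, to, step] of ranges) {
    for (let bound = from + step; bound <= to; bound += step) {
      bounds.push(bound);
    }
  }
  return bounds;
}

const bounds = buildBuckets([
  [0, 20, 1],          // 1ms steps up to 20ms
  [20, 100, 5],        // 5ms steps up to 100ms
  [100, 1000, 20],     // 20ms steps up to 1000ms
  [1000, 20000, 1000]  // 1s steps; the 20s cap is an assumption
]);

console.log(bounds.length); // 100
```

With that cap, it comes out to 20 + 16 + 45 + 19 = 100 buckets.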

🏁 The Finish Line

We were almost done and right before we were going to wrap up we saw this error:

err: out of order sample.

What the hell does that mean? Well it turns out that Prometheus cannot handle timestamp messages out of order. What the actual hell!

Let me say that again, Prometheus does not accept out of order requests. Well that's a problem. It's a problem because we batch the metrics we send. We batch them because we don't have all the data available. And we don't have it because CloudWatch doesn't send it to us all at once, nor in order.

We could wait the requisite ~24 hours for CloudWatch to update the metrics. But there is no way we are going to wait for 24 hours. We want our metrics as live as possible. It isn't critical that they are live, but there is no good reason to wait. If the technology does not support it then it is the wrong tech (does it feel wrong yet, eh, maybe...). The second solution is to use the hack flag out_of_order_time_window that Prometheus supports. Why it doesn't support this out of the box makes no sense. But it also turns out things like MySQL and PostgreSQL didn't support updating a table schema without a table lock for the longest time. The problem is that at the time of writing AWS does not let us set this [out_of_order_time_window flag](https://prometheus.io/docs/prometheus/latest/configuration/configuration/#tsdb).

That only leaves us with ~two solutions:

- ignore out of order processing, and drop these metrics on the floor

- ignore the original timestamp of processing the message and just publish now as the timestamp on every message.

Guess which one we decided to go with.... That's right, we don't really care about the order of the metrics. It doesn't matter if we got a spike exactly at 1:01 PM or 1:03 PM. Most of the time, no one will notice this difference or care. When there is a problem we'll trust our actual logs way more than the metrics anyway. Metrics aren't the source of truth.

And, I bet you thought that is what I was going to say. And it's actually true, we would be okay with that solution. But the problem is that this STILL DOES NOT FIX PROMETHEUS. You will still end up with out of order metrics, and so the solution for us was to add the CloudFront logs filename as a unique key as a label in Prometheus, so our labels look like this:

labels: {
  __name__: 'response_status_code_total',
  status_code: statusCode,
  method: method,
  route: route,
  account_id: accountId,
  _unique_id: cloudFrontFileName
},

Remember Labels are just the Dimensions in CloudWatch, they are how we will query the data. And with that, now we don't get any more errors, because out of order data points only happen within a single time series. Specifying the _unique_id causes the creation of one time series per CloudFront log file. (Is this an okay thing to do? Honestly it's impossible to tell because there is zero documentation on how exactly this impacts Prometheus at scale. Realistically there are a couple of other options to improve upon this, like having a random _unique_id (1-10) and retrying with a different value if it doesn't work. This would limit the number of unique time series to 10.)
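That random _unique_id idea could look roughly like the sketch below. We did not ship this, so treat it as an untested-in-production assumption. The pushWithUniqueId function is hypothetical, and push stands for any async function that sends one series and rejects when Prometheus reports an out of order sample. A real version should inspect the error and only retry on out of order failures.

```javascript
// Try each id from a shuffled pool of 1..poolSize until a push is accepted.
async function pushWithUniqueId(push, series, poolSize = 10) {
  const ids = Array.from({ length: poolSize }, (_, i) => String(i + 1));
  ids.sort(() => Math.random() - 0.5); // rough shuffle, good enough here

  let lastError;
  for (const id of ids) {
    try {
      await push({ ...series, labels: { ...series.labels, _unique_id: id } });
      return id;
    } catch (error) {
      lastError = error; // assumed to be "out of order" for this id; try the next
    }
  }
  throw lastError;
}

// Fake push for demonstration: only id "7" is accepted.
const fakePush = async (s) => {
  if (s.labels._unique_id !== '7') throw new Error('out of order sample');
};

pushWithUniqueId(fakePush, { labels: { __name__: 'demo_total' }, samples: [] })
  .then((id) => console.log('accepted with _unique_id', id));
```

The trade-off is up to 10 failed requests per push in the worst case, in exchange for a hard cap on the number of time series.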

Further, since we "group" or what Grafana calls "sum" by the other labels anyway, the extra _unique_id label will get automatically ignored:
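In PromQL terms that grouping is something like sum by (status_code, method, route) (response_status_code_total). What it does to the extra label can be mimicked in a few lines. This is a sketch only: the sumBy helper and the sample values are invented, and it sums a single value per series rather than running a real query.

```javascript
// Mimics "sum by (...)": group series by the chosen labels and add their values.
// Labels not listed (such as _unique_id) simply disappear from the result.
function sumBy(series, groupLabels) {
  const groups = new Map();
  for (const { labels, value } of series) {
    const kept = Object.fromEntries(groupLabels.map((name) => [name, labels[name]]));
    const key = JSON.stringify(kept);
    const group = groups.get(key) || { labels: kept, value: 0 };
    group.value += value;
    groups.set(key, group);
  }
  return [...groups.values()];
}

const current = [
  { labels: { status_code: '200', route: '/users', _unique_id: 'file-a' }, value: 4 },
  { labels: { status_code: '200', route: '/users', _unique_id: 'file-b' }, value: 6 },
  { labels: { status_code: '500', route: '/users', _unique_id: 'file-a' }, value: 1 }
];

console.log(sumBy(current, ['status_code', 'route']));
```

Three series go in, two come out, and _unique_id is nowhere to be seen.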

And the result is in!

And here's the total cost:

$0.00, wow!

If you liked this article come join our Community and discuss this and other security related topics!
